Quick Review: SpQR: A Sparse-Quantized Representation for Near-Lossless LLM Weight Compression

Author: XD / Published: December 6, 2023 23:57 / Updated: December 6, 2023 23:57 / Research & Study

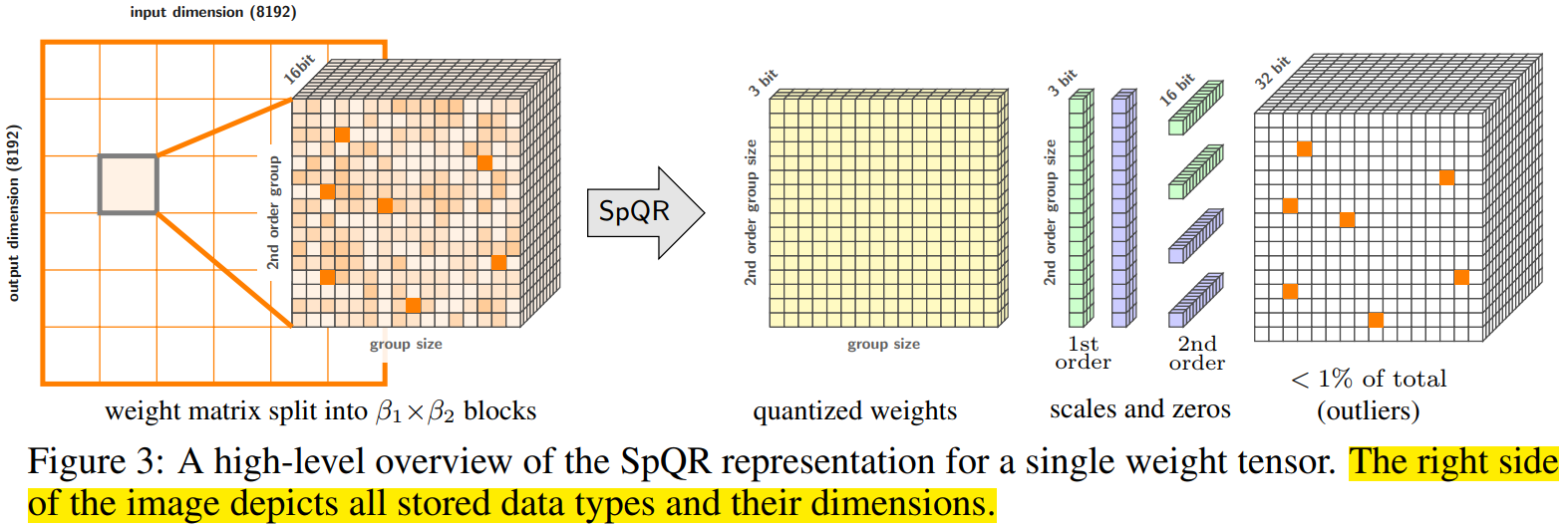

SpQR: A Sparse-Quantized Representation for Near-Lossless Large Language Model Weight Compression

- Paper: SpQR on arXiv

- Code: SpQR on GitHub

- Organization: University of Washington

Core Approach:

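- GPTQ without outliers: SpQR does not discard outliers. During GPTQ-style quantization it identifies outlier weights, meaning the ones that would cause particularly large quantization errors, and stores them separately in higher precision in a sparse format. All other weights are compressed to 3-4 bits. Because the outliers no longer distort the quantization of their neighbors, this gives near-lossless compression of large language model weights.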
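The following minimal pure-Python sketch shows the dense-plus-sparse split for a single weight row. It is a simplification under stated assumptions and is not SpQR's implementation:

- It uses plain round-to-nearest min-max quantization per small group instead of GPTQ's Hessian-based error compensation.
- It flags outliers with a hypothetical leave-one-out rule. A weight counts as an outlier if removing it from its group reduces the group's squared quantization error by more than `threshold`.
- It skips SpQR's second-level quantization of the group statistics.

All function names and parameters (`compress_row`, `find_outliers`, `threshold`, and so on) are illustrative.

```python
# Hypothetical simplification of the SpQR idea: small-group low-bit quantization
# plus a sparse map of outlier weights that are stored unquantized.
# No GPTQ error feedback, no Hessian weighting, no quantized statistics.
from typing import Dict, List, Tuple

Stats = Tuple[float, float]  # (scale, zero point)


def fit_stats(values: List[float], bits: int) -> Stats:
    """Min-max scale and zero point for asymmetric round-to-nearest quantization."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / (2 ** bits - 1) if hi > lo else 1.0
    return scale, lo


def quantize(values: List[float], stats: Stats, bits: int) -> List[int]:
    """Map values to integer codes in [0, 2**bits - 1], clamping out-of-range values."""
    scale, zero = stats
    top = 2 ** bits - 1
    return [min(top, max(0, round((v - zero) / scale))) for v in values]


def dequantize(codes: List[int], stats: Stats) -> List[float]:
    scale, zero = stats
    return [c * scale + zero for c in codes]


def sq_error(values: List[float], bits: int) -> float:
    """Squared error when a group is quantized with its own min-max statistics."""
    stats = fit_stats(values, bits)
    recon = dequantize(quantize(values, stats, bits), stats)
    return sum((v - r) ** 2 for v, r in zip(values, recon))


def find_outliers(group: List[float], bits: int, threshold: float) -> List[int]:
    """Leave-one-out test: index i is an outlier if dropping group[i] reduces
    the group's squared quantization error by more than `threshold`."""
    base = sq_error(group, bits)
    return [
        i for i in range(len(group))
        if len(group) > 1 and base - sq_error(group[:i] + group[i + 1:], bits) > threshold
    ]


def compress_row(row: List[float], bits: int, group_size: int, threshold: float):
    """Return per-group (codes, stats) plus a sparse {index: value} outlier map."""
    groups, outliers = [], {}
    for start in range(0, len(row), group_size):
        group = row[start:start + group_size]
        out = set(find_outliers(group, bits, threshold))
        inliers = [v for i, v in enumerate(group) if i not in out] or group
        stats = fit_stats(inliers, bits)  # statistics ignore the outliers
        groups.append((quantize(group, stats, bits), stats))
        outliers.update({start + i: group[i] for i in out})  # stored unquantized
    return groups, outliers


def decompress_row(groups, outliers: Dict[int, float]) -> List[float]:
    """Dequantize every group, then overwrite outlier slots with the sparse values."""
    row: List[float] = []
    for codes, stats in groups:
        row.extend(dequantize(codes, stats))
    for idx, value in outliers.items():
        row[idx] = value
    return row


if __name__ == "__main__":
    row = [0.10, 0.30, 0.20, 5.0, -0.05, 0.02, 0.03, 0.01]
    groups, outliers = compress_row(row, bits=3, group_size=4, threshold=0.045)
    print(outliers)  # prints {3: 5.0}
```

In this example (3-bit codes, groups of 4, `threshold=0.045`), only the `5.0` at index 3 is flagged. Its group is then quantized over the range [0.10, 0.30] instead of [0.10, 5.0]. As a result, `0.30` is no longer rounded down to `0.10`, and `5.0` is restored exactly from the sparse map.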
