Quick Review: Optimize Weight Rounding via Signed Gradient Descent for the Quantization of LLMs

作者:XD / 发表: 2023年12月6日 23:51 / 更新: 2023年12月7日 00:55 / 科研学习 / 阅读量:1600

Optimize Weight Rounding via Signed Gradient Descent for the Quantization of Large Language Models

- Paper: Optimize Weight Rounding on arXiv

- Code: Intel Neural Compressor on GitHub

- Organization: Intel

Key Feature:

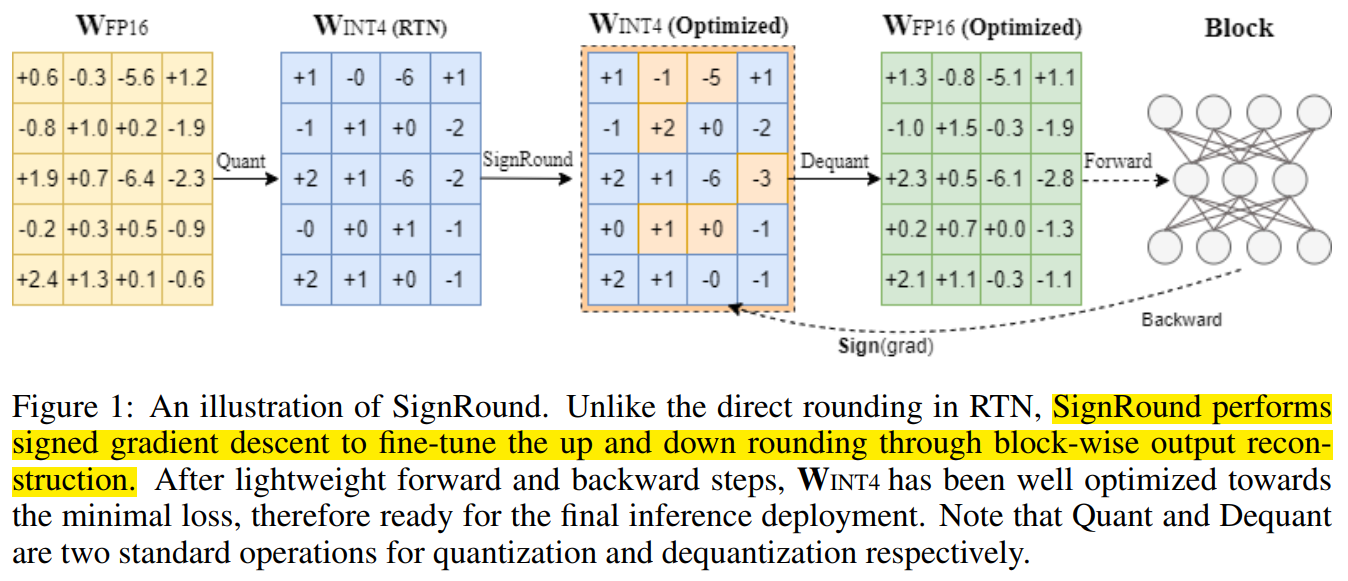

- Adaptive Weight Rounding: Utilizes backward optimization to dynamically adjust the quantized integer values, either rounding them up or down, to optimize the model's performance during quantization.

相关标签