Quick Review: Norm Tweaking: High-performance Low-bit Quantization of Large Language Models

作者:XD / 发表: 2023年12月6日 23:44 / 更新: 2023年12月6日 23:52 / 科研学习 / 阅读量:1818

Norm Tweaking: High-performance Low-bit Quantization of Large Language Models

- Paper: Norm Tweaking on arXiv

- Code: None available

- Organization: Meituan

Steps for Implementation:

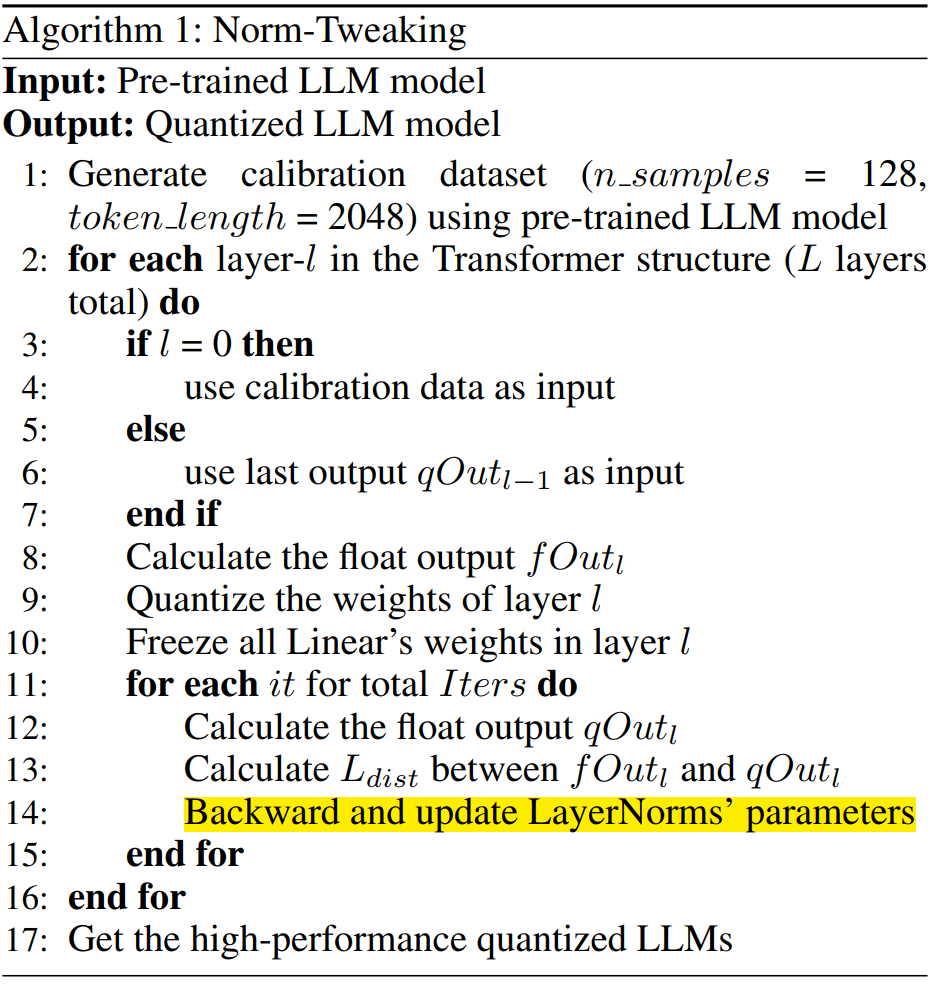

- Generate Data: Prepare and preprocess the dataset suitable for training the model.

- GPTQ: Apply GPTQ method for optimizing the quantization precision of model parameters.

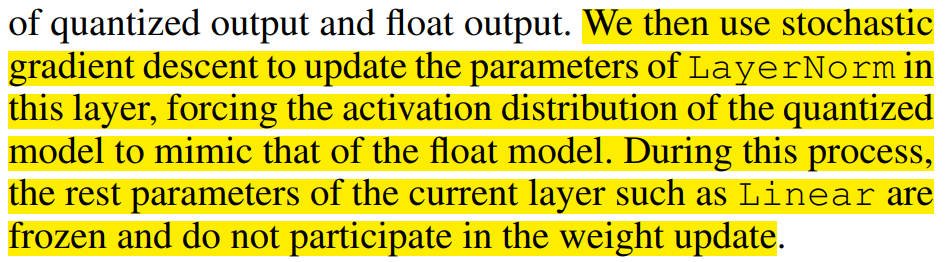

- Train LayerNorm Only: Focus on training the Layer Normalization component of the model for fine-tuning and optimization.

相关标签