Quick Review: SmoothQuant: Accurate and Efficient Post-Training Quantization for LLMs

Author: XD / Published: December 7, 2023 00:45 / Updated: December 7, 2023 00:57 / Research & Study / Views: 1893

SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models

- Paper: SmoothQuant on arXiv

- Code: SmoothQuant on GitHub

- Organization: MIT, NVIDIA

Highlight:

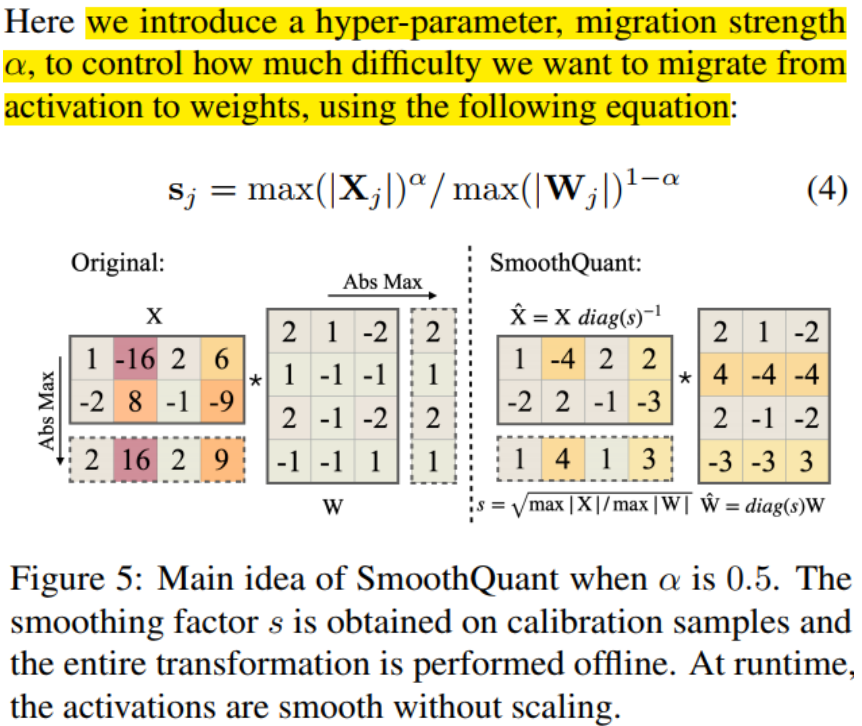

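- Hyper-parameter for Outliers: SmoothQuant introduces a migration-strength hyper-parameter α that controls how much of the quantization difficulty caused by activation outliers is shifted onto the weights. Each input channel j is divided by a smoothing factor s_j = max(|X_j|)^α / max(|W_j|)^(1 - α) on the activation side and multiplied by it on the weight side, so the layer output is mathematically unchanged while both tensors become easier to quantize.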
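
To make the role of the hyper-parameter concrete, here is a minimal NumPy sketch of the per-channel smoothing step as the paper describes it: activations are divided by a per-input-channel factor and the weights are multiplied by the same factor. The function names (`smooth_scales`, `smooth`), the `eps` guard, the toy shapes, and the choice `alpha=0.5` are assumptions for illustration; this is not the code from the official repository.

```python
import numpy as np

def smooth_scales(act_absmax, weight, alpha=0.5, eps=1e-5):
    """Per-input-channel factors s_j = max|X_j|^alpha / max|W_j|^(1 - alpha).

    act_absmax: shape (in_features,), per-channel max |activation|
    weight:     shape (in_features, out_features)
    """
    w_absmax = np.abs(weight).max(axis=1)          # max |W_j| over each input row
    s = act_absmax ** alpha / np.maximum(w_absmax, eps) ** (1.0 - alpha)
    return np.maximum(s, eps)                      # avoid dividing by ~0 later

def smooth(x, weight, alpha=0.5):
    """Return (x / s, diag(s) @ weight, s); the product x @ weight is preserved."""
    act_absmax = np.abs(x).max(axis=0)             # calibration statistic per channel
    s = smooth_scales(act_absmax, weight, alpha)
    return x / s, weight * s[:, None], s

# Toy data: 8 tokens, 4 input channels, 3 output channels.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))
x[:, 2] *= 50.0                                    # channel 2 is an activation outlier
w = rng.normal(size=(4, 3))

x_s, w_s, s = smooth(x, w, alpha=0.5)

print("per-channel |x| max before:", np.abs(x).max(axis=0))
print("per-channel |x| max after: ", np.abs(x_s).max(axis=0))
print("output preserved:", np.allclose(x_s @ w_s, x @ w))
```

A larger `alpha` moves more of the outlier magnitude into the weights, and a smaller one leaves more of it in the activations. With `alpha=0.5` the smoothed activation and weight maxima of each channel come out equal, which is why that value is a natural starting point to tune from.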
